Standard Data ETLs

Principle

In Actito eco-system we call ETL our standard flat files exchange flows that aim to feed Actito Standard Data model.

ETL stands for Extract -> Transform -> Load.

At Actito, the E represents the data extraction from your own system to one or more flat files that are uploaded to a file server shared between you and Actito. The T stands for the extracted file optional transformations (string normalizations, value mapping, date/language code/country code formatting and many more). And the L is those flat files data import into your Actito licence data model.

An Actito ETL is :

- standardized, in order to ease mapping and validation between your system's data and Actito data model.

- automated, so as to say that you have no manual operation to launch.

- scheduled, stating that Actito will run the ETL at the frequency and time you specify, or triggered, stating that Actito will run the ETL when a file is detected.

- monitored, enforcing that you can be notified of any execution's result (via e-mail reports, finished execution webhook, execution results retrieve API routes).

An Actito ETL allows you to feed your Profile and Custom tables.

Extract and transfer

For each table you wish to feed, you should provide a single CSV flat file in which each line represents a row to import into an Actito destination table, and each column is mapped to an attribute of this table.

You should always take care to extract only delta data. As it has huge impact on import performance, avoid to make full table extracts except for initial setup. Imported files are anyway limited to 100 Mb.

The file transfer must be done by using a remote file server that should be declared by defining a file transfer server configuration and at least one location (i.e. a folder on the file server).

That location will have to be referenced within the ETL definition, and Actito will try to find the specified file on that server and location each time the ETL is executed.

Check the File Transfers section for more information on setting up remote server configurations and locations.

Withing the ETL file transfer definition, you will be able to specify a pattern for each file to retrieve (multiple flat files can be included in the same compressed archive, or compressed individually).

The name of a file to retrieve can include a placeholder so as the ETL can find the right file regarding the date of the execution.

(ex: declaring the file name pattern myfile_$yyyyMMdd.csv will allow you to have the ETL retrieving the file named myfile_20220831.csv when execution on 31/08/2022, more information on file names and available placeholders in the MASS IMPORTS API).

As an ETL can be a "multi files" ETL, which means that you aim to load multiple tables of your Actito data model with a file for each, it's important to note that all required files are meant to be present when the execution will run.

In consequence, the absence of any of them will imply the ETL execution to fail.

When a file is set not required, its absence will let the ETL execution go on without any error. (Absence is in this case considered as a normal case).

With a multifiles ETL, a single physical file can be loaded in multiple tables. In this case, the settings of each loading can be different (destination table, attributes mapping, data transformations...).

Note that there is no constraint among the extension of the file to retrieve.

Even if Actito ETLs only deal with CSV formatted files, those can be named like myfile.txt for instance.

Transform

When defining your ETL, you can optionally specify data transformations that will be applied to the files you provide before loading them into Actito.

This is mostly useful when your system does not use same formats than Actito.

Available transformations are:

- Date/Datetime formatting: the ETL will reformat the provided date/datetim from declared pattern to Actito standard one.

- Value mapping: the ETL will replace matched values with mapped ones.

- Country formatting: the ETL will reformat the provided country code from declared ISO format to Actito standard one (

ISO 3166-1 alpha-2, two chars code). - Language formatting: the ETL will reformat the provided language code from declared ISO format to Actito standard one (

ISO 639-1, two chars code). - Phone number formatting: the ETL will reformat the provided phone number by formatting it to international format (with country prefix) regarding a provided country code.

- Basic string normalization: the ETL will apply textual transformations on provided text (to lower case, to upper case etc. check ETLs API for a full listing of available normalizations)

- String cropping: the ETL will crop the provided text by keeping or deleting the desired left or right characters.

- String replacement: the ETL will replace all occurrences of a desired text within the provided text value by the specified replacement text.

- Chars removal: the ETL will remove all occurrences of the specified characters within the provided text value.

Note that if a defined transformation can not be applied (for example if the provided date value does not match declared date pattern), the ETL will not fail but original value will be kept.

Depending on destination attribute in the table, this should be lead to a data validation reject. See below for more info on data validations.

Only one transformation can be defined for a file column.

Check standard Actito data formats here.

Load

The loading process to your Actito Data Model is composed of two steps :

- validation

- write

The validation steps will first check that the provided file is correctly formated (check CSV flat file RFC).

If correct, the validation will then check the format integrity of the provided data.

For example, to be able to write to the birthDate attribute of a profile table, Actito ensures the mapped CSV column contains a valid date with pattern yyyy-MM-dd; to be able to write a monetary amount to the total attribute of your Sales custom table, Actito ensures that the column contains a valid number with . decimal separator etc.

For each ETL, you can define the data operation that Actito should apply on your Actito Data Model. You can therefore declare that rows should be created and/or updated, or deleted.

As an ETL can be a "multi files" ETL, which means that you aim to load multiple tables of your Actito data model with a file for each, it's important to note that all files are validated/loaded independently and sequentially.

In consequence, if your Actito data model have integrity constraints (for example a Tickets custom table referencing a profile from a Customers profile table) you must always order the declared data loadings in the logical loading order that enforces dependend data to be loaded first

(in the example, the file that is aimed to feed Customers table should be declared before the one that aims to feed Tickets table).

Though, while coming to validating/loading CSV files, if the file that should feed Customers table is badly formatted, the ETL will anyway validate and load the file that should feed Tickets table. The records that reference already existing profiles in the Customers table will be loaded, the ones referencing new profiles present in the provided customers file will be then rejected.

Automate and schedule

Once the file retrieving, parsing and loading protocol is defined, you can activate your ETL.

Actito enables you to specify the frequency (at most once a day) with specified fire time an activated SCHEDULED ETL will run and load the data from your file(s).

An ETL can also be TRIGGERED, meaning that it will run automatically when a file with a matching name pattern is detected.

At any time, you can deactivate an ETL, what would result in no execution during deactivation period (for instance, if you have maintenance or upgrades to apply on your extract process and that result in a few days delay before being able to load data again).

Learn more about the difference between SCHEDULED and TRIGGERED ETLs, and how to set them up in the data sync ETL use case.

Execution result

Each time the ETL runs, it creates an execution. The result of an execution includes :

- when the execution occurred.

- what is the global status of the execution.

- the details of the retrieve, validation and loading for each expected file.

An execution can globally fail when a file could not be retrieved (inaccessible file server, at least one expected file was not found on the server or not present in the found compressed archive).

If a file is correctly retrieved, it can still be an invalid CSV file, or can miss expected headers or contain rows that contain invalid data (i.e. bad format of cell value regarding the corresponding table attribute's restrictions (length, possible values, integrity constraint etc.)).

In the end you can have errors while writing the data to the table : duplicate key if a file row contains a key that already exists in the table (CREATE_ONLY mode), if a file row does not match an existing table row (UPDATE_ONLY ou DELETE modes), if the number of lines in the table reached the limit etc.

The behavior of the ETL is that only valid rows are actually written to Actito. All other rows are rejected (check below for rejects management).

The execution result contains the counters for each step of the read/rejected/written rows.

Only lines with actual data modification count as 'updated'. Lines that already exist identically in the DB are only counted in the #lines read column and not in any other.

The #lines read can therefore be bigger than the sum of the other columns.

Optionally, an execution result report can be sent by e-mail to a list of recipients.

This report with contain all the information needed to check the status of the execution.

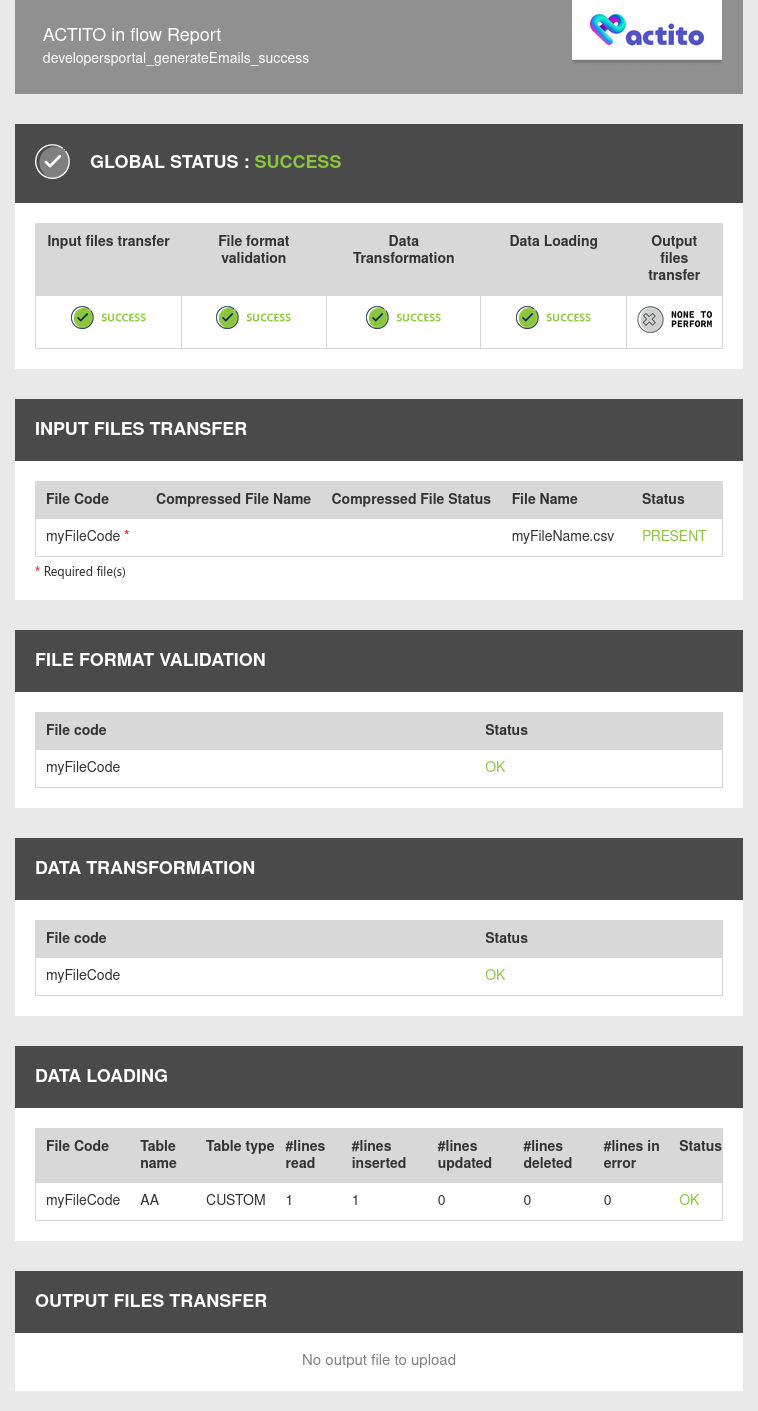

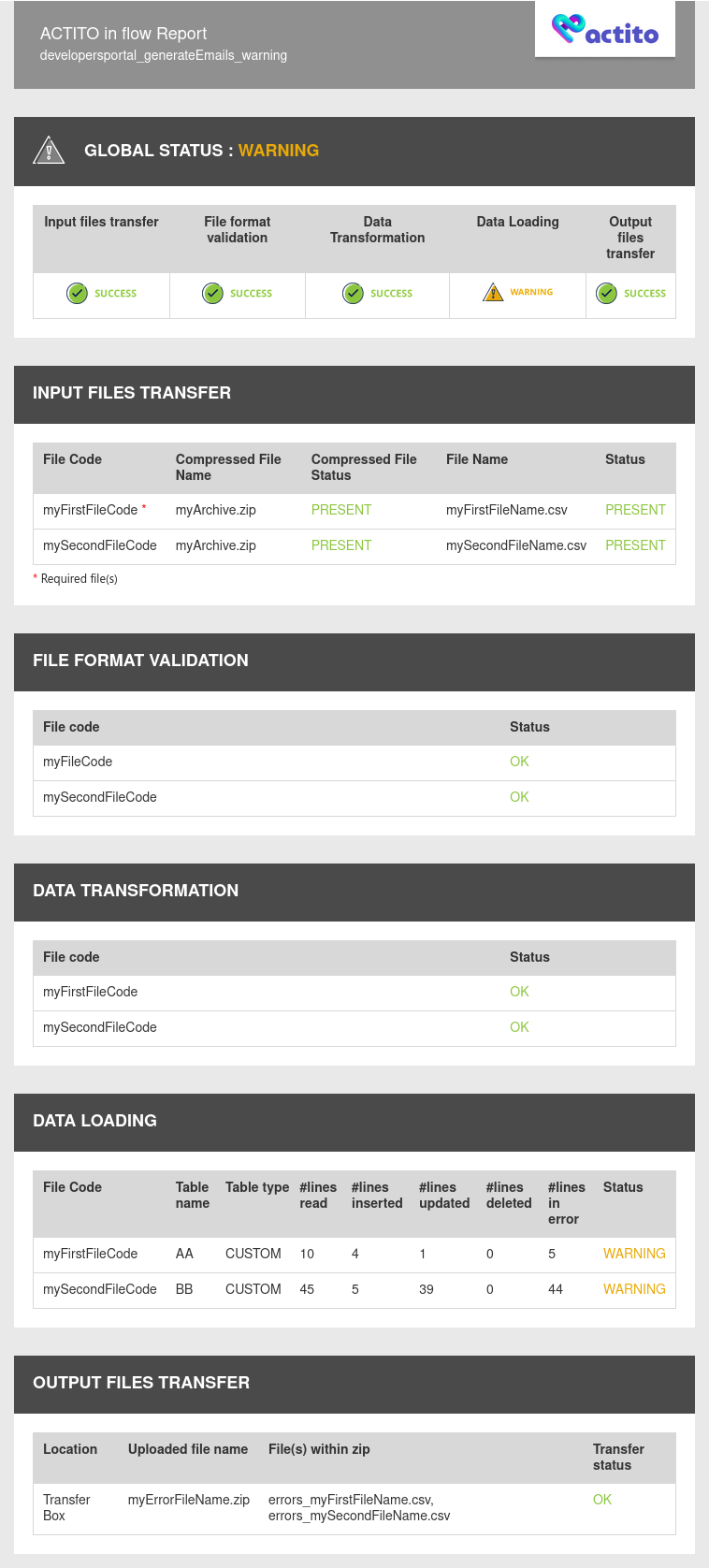

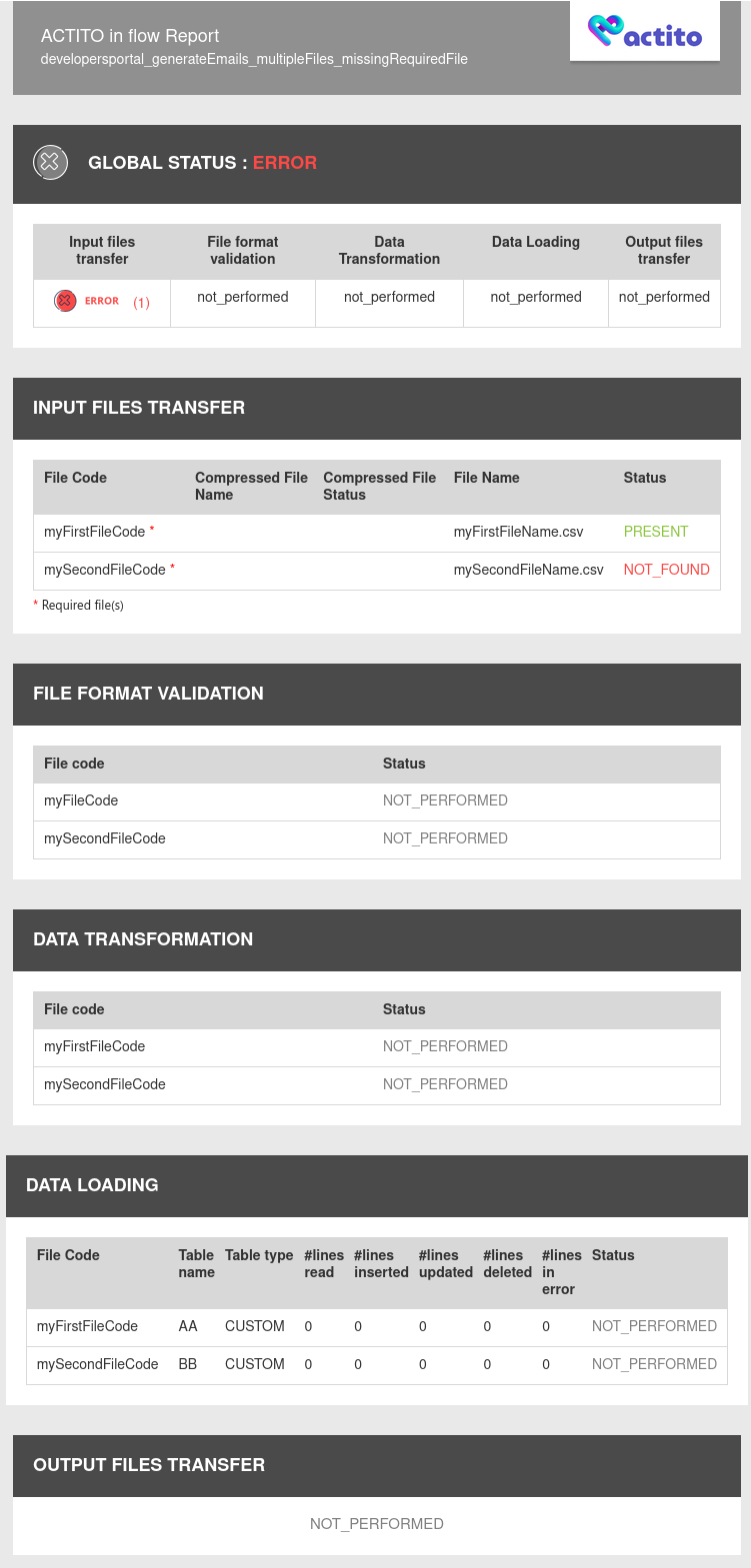

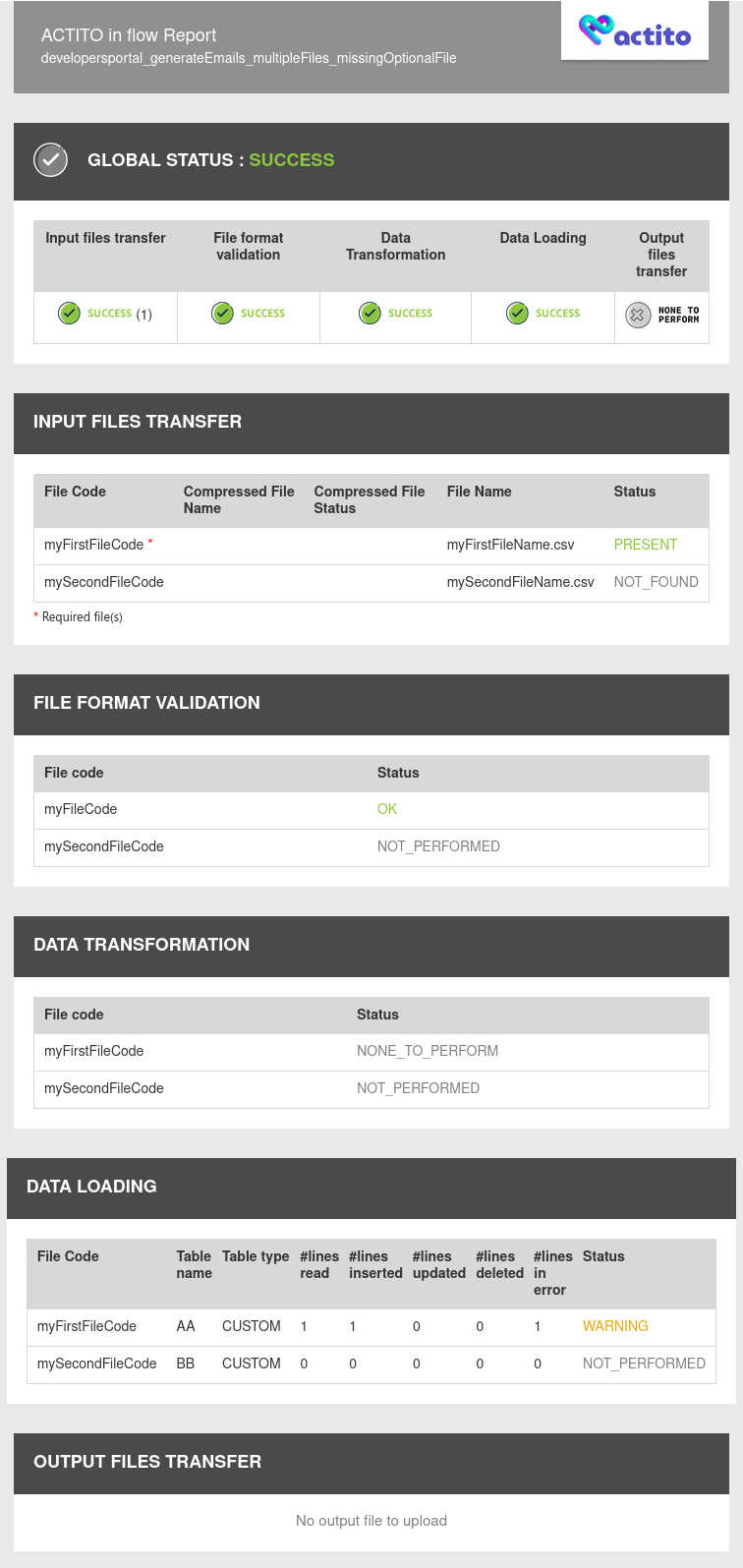

Few representative reports examples:

- ETL Report Success

- ETL Report Warning

- ETL Report Missing Required File

- ETL Report Missing Optional File

Rejects

For each execution, when data validation and load steps detect at least one wrong value or a key integrity issue, Actito ETLs produces an error file that contains all rejected rows.

The rows in this error file are the original ones with two more columns referencing the invalid column name and the reject reason.

Hereby follows the list of possible reason:

- INVALID_FIELD_VALUE (for wrong value regarding format)

- MISSING_FIELD_VALUE (for missing mandatory value)

- DUPLICATE_OBJECT (for duplicate unique value already existing in destination table)

- UNKNOWN_DATA (for missing existing value in

UPDATE_ONLYmode) - NOT_FOUND (for missing existing value in

DELETEmode) - DATA_ALREADY_EXISTS (when a unique value already exists in destination table in

CREATE_ONLYmode) - HARD_LIMIT_EXCEEDED (when the maximum number of records in destination table is reached)

When defining your ETL you have to specify the output file transfer server location where Actito will upload these reject files so as you can retrieve, process them and possibly correct the data for further re-integration (in next day file for instance).

Operating Actito ETLs

All available operations to operate Actito ETLs are accessible by using our MASS IMPORTS API.